Brief Introduction to Naive Bayes Classifier

The Naive Bayes classifier is one of the text classifiers available in NLTK.

Ref : https://www.nltk.org/book/ch01.html

In machine learning, a naive Bayes classifier is a simple probabilistic classifier based on applying Bayes’ theorem. The feature model used by a naive Bayes classifier makes strong independence assumptions: within a class, the presence of a particular feature is treated as unrelated to the presence of every other feature.

Naive Bayes simplifies the calculation of probabilities by assuming that, for a given class value, each attribute is independent of all other attributes. This is a strong assumption, but it results in a fast and effective method.

The probability of an attribute value given a class value is called a conditional probability. Multiplying the conditional probabilities of all attributes for a given class value, together with the prior probability of that class, gives a score proportional to the probability that a data instance belongs to that class.

To make a prediction we can calculate probabilities of the instance belonging to each class and select the class value with the highest probability.
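
The prediction rule described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the toy training set, the names `class_scores` and `predict`, and the add-one smoothing are all hypothetical choices made for this sketch, and it is not necessarily how NLTK estimates its probabilities.

```python
from collections import Counter, defaultdict

# Hypothetical toy training set: (set of words present, class label).
training = [
    ({"good", "safe"}, "pos"),
    ({"good", "effective"}, "pos"),
    ({"bleeding", "risk"}, "neg"),
    ({"risk", "stroke"}, "neg"),
]
vocabulary = sorted(set().union(*(words for words, _ in training)))

# Count documents per class, and per class how many documents contain each word.
class_counts = Counter(label for _, label in training)
feature_counts = defaultdict(Counter)
for words, label in training:
    feature_counts[label].update(words)


def class_scores(words):
    """Return normalized class probabilities under the naive independence assumption."""
    scores = {}
    for label, n_docs in class_counts.items():
        score = n_docs / len(training)  # prior P(class)
        for word in vocabulary:
            # P(word present | class), with add-one smoothing (an assumption of this sketch)
            p_present = (feature_counts[label][word] + 1) / (n_docs + 2)
            score *= p_present if word in words else (1 - p_present)
        scores[label] = score
    total = sum(scores.values())
    return {label: score / total for label, score in scores.items()}


def predict(words):
    """Select the class with the highest probability."""
    scores = class_scores(words)
    return max(scores, key=scores.get)


print(predict({"good", "effective"}))  # → pos
print(predict({"risk", "bleeding"}))   # → neg
```

The smoothing keeps a word that never appeared in a class from forcing that class's score to zero.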

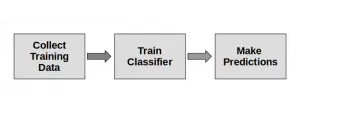

Algorithm:

P(A|B) = P(B|A) * P(A) / P(B)

- A is called the proposition and B is called the evidence

- P(A) is called the prior probability of the proposition and P(B) is called the prior probability of the evidence

- P(A|B) is called the posterior

- P(B|A) is called the likelihood
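
As a quick numeric check of the formula above, the sketch below computes a posterior from a prior and two likelihoods. The function name `posterior` and all of the numbers are made up for illustration; the evidence P(B) is expanded with the law of total probability.

```python
def posterior(prior_a, likelihood_b_given_a, likelihood_b_given_not_a):
    """Return P(A|B) = P(B|A) * P(A) / P(B)."""
    # P(B) = P(B|A) * P(A) + P(B|not A) * P(not A)
    evidence = (likelihood_b_given_a * prior_a
                + likelihood_b_given_not_a * (1 - prior_a))
    return likelihood_b_given_a * prior_a / evidence


# Hypothetical numbers: 30% of abstracts are "neg" (the prior), and the word
# "bleeding" appears in 60% of "neg" abstracts but in only 10% of the others.
print(round(posterior(0.3, 0.6, 0.1), 3))  # → 0.72
```

Seeing the word raises the probability of the "neg" class from the prior of 0.3 to a posterior of 0.72.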

Examples:

The Naive Bayes classifier is commonly used for text classification. The code below parses article text and applies sentiment analysis using NLTK text classification in Python.

Ex1:

    # Import the packages
    import json

    import nltk
    from nltk.tokenize import word_tokenize

    # word_tokenize needs NLTK's "punkt" tokenizer data, e.g. nltk.download('punkt')
    # (newer NLTK releases use 'punkt_tab').

    with open('articleData.json') as data_file:
        data = json.load(data_file)

    articles = data["clopidogrel"]

    # Collect the abstract names and article titles
    abstractNameList = [article["abstractName"] for article in articles]
    abstractTitleList = [article["articleTitle"] for article in articles]

    print(".......Before Filter Data.....")

    # Print the abstract names
    for abstractName in abstractNameList:
        print(json.dumps({'abstractName': abstractName}))

    print("............")

    # Print the article titles
    for abstractTitle in abstractTitleList:
        print(json.dumps({'abstractTitle': abstractTitle}))

    print(" ")

    # Training data
    train = [
        ("Antiplatelet is given for males.", "pos"),
        ("Effect of cardiovascular on adults in 12 months", "pos"),
        ("Heavy dose of drug is not good for the health", "pos"),
        ("Among adults and low weight patients there is a complication of high bleeding.", "neg"),
        ("There is a high risk of recurrent stroke in patients who take antiplatelet therapy", "neg"),
        # The label was missing in the original; "neg" is assumed here.
        ("In acute coronary there is definitely an uncontrollable blood flow", "neg"),
    ]

    def tokens(text):
        # Lowercase before tokenizing so that lookups match the lowercase dictionary
        return set(word_tokenize(text.lower()))

    dictionary = set(word for passage in train for word in tokens(passage[0]))

    def features(text):
        # Map every dictionary word to True/False depending on whether it occurs in the text
        present = tokens(text)
        return {word: (word in present) for word in dictionary}

    t = [(features(sentence), label) for sentence, label in train]
    classifier = nltk.NaiveBayesClassifier.train(t)

    print("..................After Filter Data.....................")

    # Keep only the abstracts that are not classified as "neg". A new list is built
    # because removing items from a list while iterating over it skips elements.
    filteredNameList = []
    for abName in abstractNameList:
        if classifier.classify(features(abName)) != "neg":
            filteredNameList.append(abName)
            print(json.dumps({'abstractName': abName}))
    abstractNameList = filteredNameList
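
The feature dictionaries passed to the classifier in Ex1 map every word of the training vocabulary to True or False. The sketch below shows that step in isolation. To stay dependency-free it uses a simple regular-expression tokenizer as a stand-in for `word_tokenize`; the helper names `tokenize`, `build_vocabulary` and `to_features` and the sample sentences are hypothetical.

```python
import re


def tokenize(text):
    """Stand-in tokenizer (an assumption of this sketch): lowercase alphanumeric runs."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_vocabulary(labeled_sentences):
    """Collect every distinct lowercase word in the training sentences."""
    return {word for sentence, _ in labeled_sentences for word in tokenize(sentence)}


def to_features(text, vocabulary):
    """Map each vocabulary word to True if it occurs in the text, else False."""
    present = set(tokenize(text))
    return {word: (word in present) for word in vocabulary}


train = [
    ("Antiplatelet is given for males.", "pos"),
    ("There is a high risk of recurrent stroke", "neg"),
]
vocab = build_vocabulary(train)
features = to_features("High risk of bleeding", vocab)

print(sorted(word for word, present in features.items() if present))
# → ['high', 'of', 'risk']
```

Words that never occur in the training data, such as "bleeding" here, are not part of the vocabulary and therefore contribute no feature at all.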

The example below finds the most probable label (positive or negative) for each article and also runs sentiment analysis on it in Python.

Ex2:

    import json

    import nltk
    from nltk.tokenize import word_tokenize
    from nltk.sentiment.vader import SentimentIntensityAnalyzer
    from textblob import TextBlob
    from textblob.classifiers import NaiveBayesClassifier

    # Requires NLTK data: the "punkt" tokenizer and the "vader_lexicon".

    with open('articleData.json') as data_file:
        data = json.load(data_file)

    # Combine each abstract name with its article title
    abstractNameList = [article["abstractName"] + ' ' + article["articleTitle"]
                        for article in data["articles"]]

    train = [
        ('physiological effect on normal people', 'pos'),
        ('there will be too much bleeding among adults and weak patients', 'pos'),
        ('physiological effect on people', 'pos'),
        ('Volunteers for health care', 'pos'),
        ('antiplatelet is given for males', 'pos'),
        ('women prefer treatment', 'pos'),
        ('effect of cardiovascular on adults in 12 months', 'pos'),
        ('either one of reversible or irreversible events definitely occurs', 'neg'),
        ('for antiplatelet, the effects or symptoms changes depending on the gender', 'neg'),
        ('among adults and low weight patients there is a complication of high bleeding', 'neg'),
    ]

    # TextBlob classifier
    cl = NaiveBayesClassifier(train)

    # NLTK classifier trained on lowercase word-presence features
    dictionary = set(word for passage in train for word in word_tokenize(passage[0].lower()))

    def features(text):
        present = set(word_tokenize(text.lower()))
        return {word: (word in present) for word in dictionary}

    t = [(features(sentence), label) for sentence, label in train]
    classifier = nltk.NaiveBayesClassifier.train(t)

    # Create the sentiment analyzer once, outside the loop
    sid = SentimentIntensityAnalyzer()
    data2 = []

    for sen in abstractNameList:
        # Most probable label and its probability
        probdist = cl.prob_classify(sen)
        label = probdist.max()
        print(label, round(probdist.prob(label), 2))

        # TextBlob classifier
        result = TextBlob(sen, classifier=cl).classify()
        print("textblob classifiers result ===> " + result)
        if result == 'pos':
            data2.append({"ArticleName": sen})

        # Sentiment analysis
        ss = sid.polarity_scores(sen)
        for k in sorted(ss):
            print('{0}: {1}, '.format(k, ss[k]), end='')
        print()

        # NLTK classifier
        print("nltk classifiers result ===> " + classifier.classify(features(sen)))
        print()
        print()

    # Save the articles classified as positive
    with open('data.json', 'w') as outfile:
        json.dump({"article": data2}, outfile)
